Implementing health checks

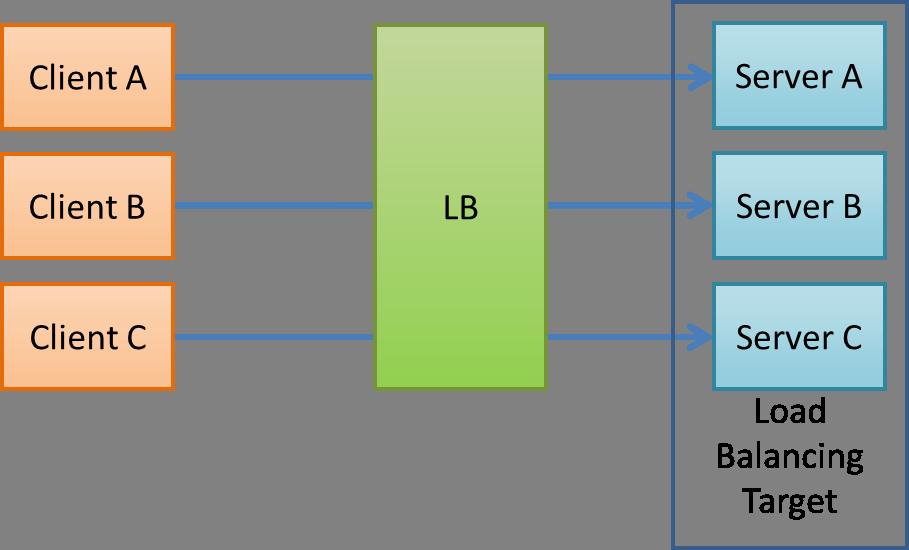

Health checks are a way of asking a service on a particular server whether it can perform its work normally. A load balancer periodically asks each server this question to determine which servers are safe to send traffic to. A service that polls for messages from a queue may ask itself whether it is healthy before deciding to poll for more work. A monitoring agent, running either on each server or as part of an external monitoring fleet, can ask servers whether they are healthy so that it can raise alarms or automatically react to failed servers.
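
The queue-poller case can be sketched as follows. This is a minimal illustration, assuming an in-process `queue.Queue` and a hypothetical `is_healthy` callable supplied by the service; `poll_once` is an illustrative name, not an API from any queueing library.

```python
import queue
from typing import Callable, List


def poll_once(work_queue: "queue.Queue[str]",
              is_healthy: Callable[[], bool],
              processed: List[str]) -> bool:
    """Take one message only if the local health check passes.

    Returns True if a message was taken, False if polling was skipped
    because the server is unhealthy or the queue was empty.
    """
    # Ask ourselves first: an unhealthy poller that keeps pulling
    # messages would fail every one of them.
    if not is_healthy():
        return False
    try:
        message = work_queue.get_nowait()
    except queue.Empty:
        return False
    # Hypothetical "processing": record the message as handled.
    processed.append(message)
    return True


work: "queue.Queue[str]" = queue.Queue()
for m in ("order-1", "order-2"):
    work.put(m)
handled: List[str] = []
print(poll_once(work, lambda: False, handled), work.qsize())  # False 2
print(poll_once(work, lambda: True, handled), handled)        # True ['order-1']
```

An unhealthy poller leaves messages on the queue, where a healthy poller can pick them up instead.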

As you saw in my website bug example, unhealthy servers that continue to serve can disproportionately reduce the availability of the entire service. In a fleet of 10 servers, one bad server means the fleet's availability is 90% or less. Worse, some load balancing algorithms, such as "Least Requests", give more work to the fastest servers. When a server fails, it often starts failing requests quickly, which creates a "black hole" in the service fleet: the failed server attracts more requests than the healthy servers do. In some cases, we add extra protection that slows failed requests down to match the average latency of successful requests, so that a failed server no longer attracts extra traffic. In other scenarios, such as queue pollers, the problem is harder to avoid. For example, if a queue poller polls as fast as it can receive messages, a failed server becomes a black hole as well. With such a diverse set of environments for distributing work, the way you think about protecting against a partially failed server depends on your system.
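
To make the black-hole effect concrete, here is a toy simulation of a "Least Requests" balancer. It is a sketch under simplifying assumptions: one request arrives per tick, each server has a fixed latency, and ties go to the lowest-numbered server. `bad_server_share` is a hypothetical helper, and the numbers describe only this model, not any real fleet.

```python
from typing import List


def bad_server_share(latencies: List[int], bad: int, ticks: int = 1000) -> float:
    """Return the fraction of requests a Least Requests balancer sends to `bad`.

    Toy model: one request arrives per tick and goes to the server with the
    fewest outstanding requests (ties go to the lowest index). A request sent
    to server i stays outstanding for latencies[i] ticks.
    """
    # Completion tick of every in-flight request, per server.
    outstanding: List[List[int]] = [[] for _ in latencies]
    routed = [0] * len(latencies)
    for t in range(ticks):
        # Retire requests that have completed by this tick.
        for i, pending in enumerate(outstanding):
            outstanding[i] = [done for done in pending if done > t]
        # Least Requests: min() returns the first server with the fewest in flight.
        target = min(range(len(latencies)), key=lambda i: len(outstanding[i]))
        outstanding[target].append(t + latencies[target])
        routed[target] += 1
    return routed[bad] / ticks


# Three healthy servers take 10 ticks per request; server 3 fails in 1 tick.
print(bad_server_share([10, 10, 10, 1], bad=3))   # 0.7
# Padding failures to the healthy latency removes the extra pull.
print(bad_server_share([10, 10, 10, 10], bad=3))  # 0.2
```

In this model the fast-failing server receives 70% of all requests instead of a fair 25%. With failures slowed to the healthy latency it receives 20%, slightly below 25% only because ties favor lower-numbered servers.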

Servers fail independently for many reasons, including disks that become unwritable and cause requests to fail immediately, clocks that suddenly skew so that calls to dependencies fail authentication, servers that cannot retrieve updated cryptographic material so that encryption and decryption fail, critical support processes that crash because of their own bugs, memory leaks, and deadlocks that freeze processing.
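
Two of these failure modes can be probed locally. The sketch below is illustrative only: `disk_is_writable` and `clock_within_skew` are hypothetical helper names, the 5-second skew limit is an arbitrary assumption, and `reference_time` is assumed to come from some trusted time source that the example does not implement.

```python
import os
import tempfile
import time
from typing import Callable


def disk_is_writable(directory: str) -> bool:
    """Return True if a small file can be created, written, and synced in `directory`."""
    try:
        with tempfile.NamedTemporaryFile(dir=directory) as f:
            f.write(b"health-check")
            f.flush()
            os.fsync(f.fileno())  # push the write through to the disk
        return True
    except OSError:
        # Missing directory, read-only filesystem, full disk, I/O error, ...
        return False


def clock_within_skew(reference_time: float, max_skew_s: float = 5.0,
                      now: Callable[[], float] = time.time) -> bool:
    """Return True if the local clock is within max_skew_s of reference_time.

    reference_time is assumed to come from a trusted source (hypothetical here).
    """
    return abs(now() - reference_time) <= max_skew_s


print(disk_is_writable(tempfile.gettempdir()))
print(clock_within_skew(1000.0, now=lambda: 1003.0))  # True
print(clock_within_skew(1000.0, now=lambda: 1010.0))  # False
```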

Servers also fail for correlated reasons that cause many or all servers in a fleet to fail at the same time, such as outages of shared dependencies and large-scale network issues. An ideal health check would test every aspect of server and application health, perhaps even verifying that noncritical support processes are running. However, trouble arises when health checks fail for noncritical reasons and those failures are correlated across servers. When automation removes a server from service even though it could still do useful work, the automation does more harm than good.
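
One way to keep thorough checks without letting noncritical failures pull servers out of service is to run and report every check but let only critical ones decide the health verdict. The sketch below shows that idea; `Check`, `evaluate`, and the check names are hypothetical, and this is one possible approach rather than a prescribed design.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Check:
    name: str
    run: Callable[[], bool]
    critical: bool  # only critical checks may take the server out of service


def _safe_run(check: Check) -> bool:
    # Treat an exception raised inside a check as a failed check.
    try:
        return bool(check.run())
    except Exception:
        return False


def evaluate(checks: List[Check]) -> Tuple[bool, Dict[str, bool]]:
    """Return (healthy, per-check results).

    Every check runs and is reported (for dashboards or alarms), but only
    critical failures make the server report itself unhealthy.
    """
    results = {c.name: _safe_run(c) for c in checks}
    healthy = all(results[c.name] for c in checks if c.critical)
    return healthy, results


checks = [
    Check("disk-writable", lambda: True, critical=True),
    Check("log-shipper-running", lambda: False, critical=False),
]
print(evaluate(checks))
# (True, {'disk-writable': True, 'log-shipper-running': False})
```

A failed noncritical check still shows up in the results, so it can raise an alarm, but it does not remove the server from service.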

The difficulty with health checks lies in this tension: on one hand, thorough health checks allow quick mitigation of single-server failures; on the other, they risk false-positive failures across the entire fleet. So one of the challenges of building a good health check is carefully guarding against false positives. In general, this means that the automation around health checks should stop directing traffic to a single bad server but keep allowing traffic if the entire fleet appears to be having trouble.
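
A minimal sketch of that policy, often called failing open, might look like this. The function name `routable_servers` and the 50% threshold are illustrative assumptions, not values taken from any particular load balancer; the point is only that a fleet-wide failure is treated as suspect rather than acted on.

```python
from typing import Dict, List


def routable_servers(health: Dict[str, bool],
                     min_healthy_fraction: float = 0.5) -> List[str]:
    """Return the servers that should receive traffic.

    Normally only servers that pass their health check receive traffic.
    If less than min_healthy_fraction of the fleet looks healthy, the cause
    is more likely correlated (or the check itself is wrong), so fail open
    and keep sending traffic to every server.
    """
    servers = sorted(health)
    if not servers:
        return []
    healthy = [s for s in servers if health[s]]
    if len(healthy) / len(servers) < min_healthy_fraction:
        return servers  # fail open: don't let a fleet-wide false positive take everything down
    return healthy


print(routable_servers({"a": True, "b": True, "c": False}))    # ['a', 'b']
print(routable_servers({"a": False, "b": False, "c": False}))  # ['a', 'b', 'c']
```

A single bad server is removed from rotation, but when most of the fleet reports failure, traffic keeps flowing to everyone.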